James Shore writes about two kinds of documentation and agile development.

All of us who have tried to convince someone to value agile methods over plan-driven processes have encountered the phrase "but we need documentation". I really saw the light while reading Mr. Shore's article. The idea had been clear to me already, but dividing documentation into these two categories gave me the simple structure I had been looking for:

1. Get Work Done

2. Enable Future Work

The following situation is not uncommon. An embedded system project is getting closer to a process gate. All of a sudden it is time to write documentation, because documents are "demanded by the process". This is ridiculous! A process does not demand or want anything, and it certainly does not NEED anything. The dominant part of any project system is its people, not its process. What do they NEED in order to progress effectively? That's right, you in the back row: communication. Everyone agrees with that, but why is communication so strongly associated with paper documents in engineering and project management? Are we still paying the price of the stereotype, created decades ago, that engineers cannot communicate? If so, what makes project managers think that engineers can write?

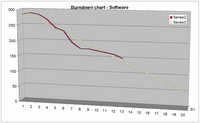

So, back to the project. The project has built working prototypes through incremental development and short time-boxes in cross-disciplinary engineering teams. Electronic schematics, part lists, price estimates, firmware, mechanical drawings, thermal management results, power handling results, EMC data, etc. are available as a natural outcome of the experimenting. What is missing if we need to COMMUNICATE the project, its status, its risks, etc.?

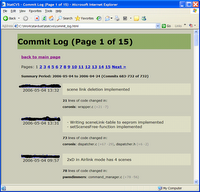

I heard that! "Documentation", you whispered. This is what the process demands, and for some weird reason it is interpreted as Word documents. So the team slips into an unproductive mode and writes Word documentation at a velocity of two pages per month. Gate passed, everyone happy?

Dead wrong. The first downside is that the documentation is now considered done. We proceed with the prototypes and create more new knowledge at a detailed level. This is the spearhead of the new knowledge created in this project. Unfortunately, since time was wasted in the early stages, we will be in a hurry towards the end of the project. This means that documentation gets less attention at this crucial final stage. Not to mention the frustrated engineers who have already documented a lot without receiving any feedback (the dinosaur process just swallows the papers). At this later stage it is harder to convince them of the importance of documentation. This documentation, which James Shore calls Enable Future Work documentation, remains missing in many so-called traditional projects.

The second downside comes from the fact that we now have the Get Work Done documentation, but not the high-quality Enable Future Work documentation. This documentation is handed to the next project team as a starting point for their work. It contains obvious facts, which are worthless. More importantly, it contains flaws and speculations that were proven wrong during further experimentation. Unfortunately, this new information typically never gets updated into the documents. So there is a lot of information that is not true and does not match the actual final design. The new team then spends extra time figuring out whether to believe the document or the design. That is, unless they are experienced enough to always go with the actual design.

My Three Day Faceoffs post describes, in practice, the power of face-to-face communication over paper documents.

Get Work Done (Word) documentation is considered harmful.